Unveiling the Stability of Flash Attention in Machine Learning: A Comprehensive Analysis

Introduction

In large-scale machine learning, training stability is paramount. Organizations training state-of-the-art Generative AI models have recently reported cases of instability, often appearing as sudden spikes in the loss. One potential culprit is numeric deviation, a problem that grows with the size and complexity of modern workloads. Although it is widely recognized as important, its impact is hard to quantify because training runs are so costly that controlled comparisons are rarely feasible.

Background

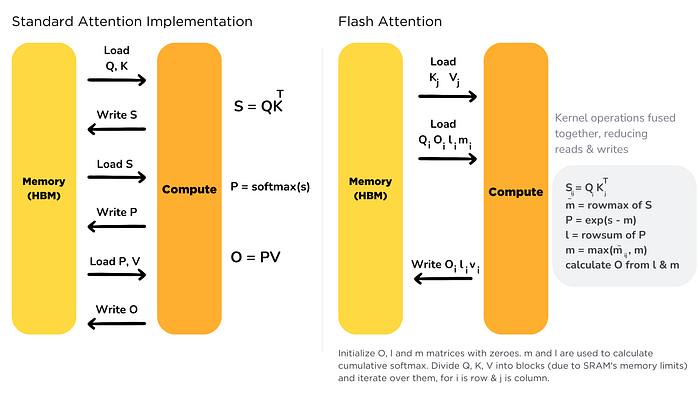

Training large-scale machine learning models presents unique challenges, particularly concerning stability. Numeric deviation, here meaning the difference between the result of an optimized computation and that of a reference computation under finite-precision arithmetic, has emerged as a significant concern in this context. Flash Attention, a widely adopted optimization of the attention computation, is a natural subject of investigation: it is used in many training pipelines, and it reorganizes the computation in ways that could affect both stability and performance.

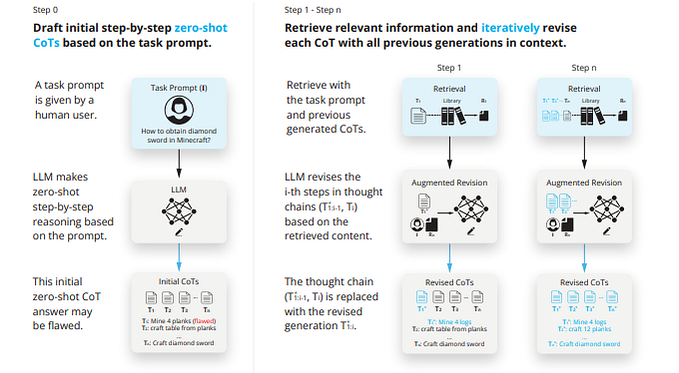

Methodology

To study numeric deviation and its implications, the authors of the study developed a principled two-part approach. First, they construct proxies that put observed deviations in context when the downstream effects cannot be quantified directly. Second, they run a data-driven analysis that uses the Wasserstein Distance, a measure of how far apart two distributions are, to assess how numeric deviation changes model weights over the course of training.
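To make the Wasserstein Distance concrete, here is a minimal sketch of the one-dimensional case. It is not the study's code: the helper `wasserstein_1d`, the synthetic "weights", and the two noise levels are all assumptions chosen for illustration. It relies on the fact that, for two samples of equal size, the 1-Wasserstein distance equals the mean absolute difference between the sorted values.

```python
import random

def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two equal-size 1-D samples.

    For equal sample counts this is the mean absolute difference
    between the sorted values of the two samples.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample counts")
    pairs = zip(sorted(xs), sorted(ys))
    return sum(abs(a - b) for a, b in pairs) / len(xs)

random.seed(0)

# Hypothetical stand-in for a flattened weight tensor of a reference model.
reference = [random.gauss(0.0, 0.02) for _ in range(10_000)]

# Two hypothetical perturbed copies: one with small deviation, one with large.
small_dev = [w + random.gauss(0.0, 1e-5) for w in reference]
large_dev = [w + random.gauss(0.0, 5e-3) for w in reference]

print("W1(reference, small_dev):", wasserstein_1d(reference, small_dev))
print("W1(reference, large_dev):", wasserstein_1d(reference, large_dev))
```

The larger perturbation should produce the larger distance, which is the kind of relative comparison the metric supports. In practice a library routine such as `scipy.stats.wasserstein_distance` would likely be used instead of a hand-written helper.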

Case Study: Flash Attention vs. Baseline Attention

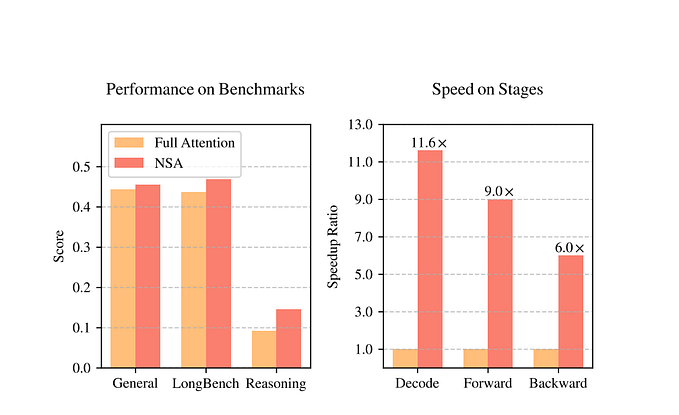

A critical part of the study compares Flash Attention with Baseline Attention in terms of numeric deviation. In an isolated forward pass at BF16 precision, Flash Attention exhibits roughly an order of magnitude more numeric deviation than Baseline Attention. However, the data-driven analysis of model weights shows that the numeric deviation introduced by Flash Attention is 2–5 times less significant than that introduced by low-precision training.
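The idea of measuring numeric deviation in an isolated forward pass can be sketched in a few lines. The example below is an assumption-laden toy, not the study's setup and not the real Flash Attention kernel: `to_bf16` emulates BF16 rounding in software, `tiled_attention` is a simplified single-query attention that processes keys in blocks with a running softmax, and the sizes and random inputs are arbitrary. Both variants are compared against a full-precision result, and the script makes no claim about which one deviates more.

```python
import math
import random
import struct

def to_bf16(x):
    """Emulate BF16: round to float32, then keep the top 16 bits (nearest-even)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits = (bits + 0x7FFF + ((bits >> 16) & 1)) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def dot(a, b, rnd):
    acc = 0.0
    for x, y in zip(a, b):
        acc = rnd(acc + rnd(x * y))
    return acc

def baseline_attention(q, keys, values, rnd=float):
    """Single-query softmax attention computed over all keys at once."""
    scores = [dot(q, k, rnd) for k in keys]
    m = max(scores)
    exps = [rnd(math.exp(rnd(s - m))) for s in scores]
    total = 0.0
    for e in exps:
        total = rnd(total + e)
    out = [0.0] * len(values[0])
    for e, v in zip(exps, values):
        w = rnd(e / total)
        out = [rnd(o + rnd(w * x)) for o, x in zip(out, v)]
    return out

def tiled_attention(q, keys, values, block=4, rnd=float):
    """Same math in exact arithmetic, but with a running softmax over key blocks."""
    m, total = -math.inf, 0.0
    out = [0.0] * len(values[0])
    for start in range(0, len(keys), block):
        scores = [dot(q, k, rnd) for k in keys[start:start + block]]
        m_new = max(m, max(scores))
        # Rescale the partial sum and output whenever the running max changes.
        scale = rnd(math.exp(rnd(m - m_new)))
        total = rnd(total * scale)
        out = [rnd(o * scale) for o in out]
        for s, v in zip(scores, values[start:start + block]):
            e = rnd(math.exp(rnd(s - m_new)))
            total = rnd(total + e)
            out = [rnd(o + rnd(e * x)) for o, x in zip(out, v)]
        m = m_new
    return [rnd(o / total) for o in out]

def max_abs_diff(a, b):
    return max(abs(x - y) for x, y in zip(a, b))

random.seed(0)
d, n = 8, 16  # hypothetical head dimension and number of keys
# Inputs are rounded to BF16 up front so only the computation differs.
q = [to_bf16(random.gauss(0, 1) / math.sqrt(d)) for _ in range(d)]
keys = [[to_bf16(random.gauss(0, 1)) for _ in range(d)] for _ in range(n)]
values = [[to_bf16(random.gauss(0, 1)) for _ in range(d)] for _ in range(n)]

golden = baseline_attention(q, keys, values)  # full-precision reference
dev_baseline = max_abs_diff(baseline_attention(q, keys, values, rnd=to_bf16), golden)
dev_tiled = max_abs_diff(tiled_attention(q, keys, values, rnd=to_bf16), golden)
print("baseline deviation:", dev_baseline)
print("tiled deviation:   ", dev_tiled)
```

With `rnd=float` (no rounding) the two functions agree to within floating-point noise, so any gap that appears under `to_bf16` comes from where rounding happens rather than from a difference in the math. The extra rescaling steps in the tiled version are additional places where rounding can occur.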

Implications and Future Directions

These findings matter for anyone training models with Flash Attention. They indicate that the extra numeric deviation it introduces is measurable in a forward pass but small relative to the deviation from low-precision training when judged by its effect on model weights. Understanding where numeric deviation comes from can inform strategies for mitigating instability and further optimizing training. The study also underscores the need for continued research into the systems challenges of large-scale machine learning.

Conclusion

The study sheds light on the stability of Flash Attention during training. By measuring numeric deviation and relating it to changes in model weights, it contributes to our understanding of training dynamics. Continued research and collaboration remain essential to improving the stability and efficiency of large-scale model training.