Enhancing Reasoning in AI: RAT Approach for Long-Horizon Generation

Large language models (LLMs) have made remarkable progress across a wide range of natural language processing tasks. However, a significant challenge remains: ensuring factual accuracy and mitigating hallucinations in complex reasoning scenarios, especially in long-horizon generation tasks. These tasks involve multi-step reasoning and require the LLM to break a problem down into a sequence of intermediate steps.

The RAT (Retrieval Augmented Thoughts) approach tackles this challenge by combining two established techniques:

- Chain-of-Thought (CoT) Prompting: This method encourages LLMs to generate a step-by-step thought process alongside the final answer. This provides a “scratchpad” for complex reasoning and helps humans understand how the LLM arrived at its answer.

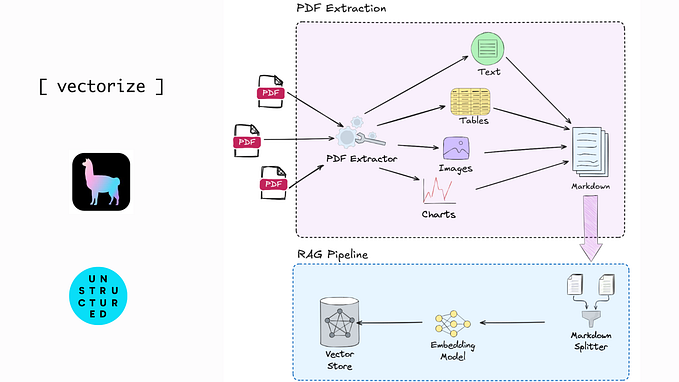

- Retrieval-Augmented Generation (RAG): This technique leverages external knowledge sources to improve the factual grounding of LLM responses. Given a query, it retrieves relevant passages from a large text corpus and supplies them to the model as additional context.

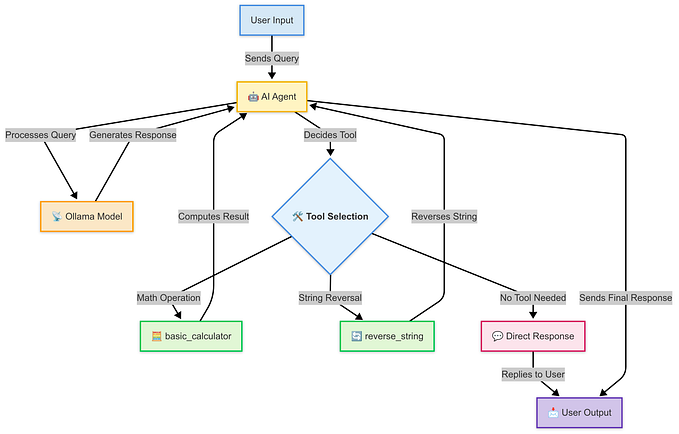

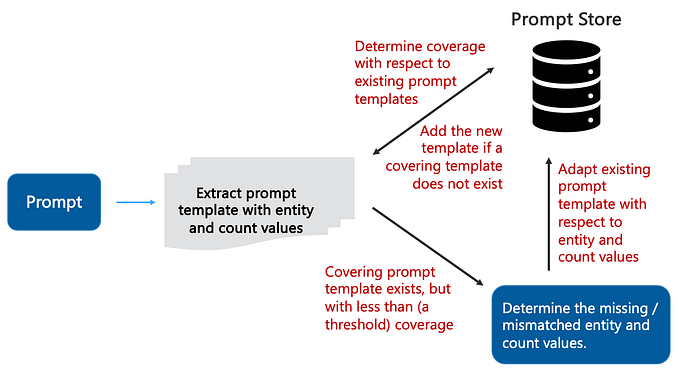
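To make the two ingredients concrete, here is a minimal Python sketch that builds a CoT prompt and a retrieval-augmented prompt. The helper names (`retrieve`, `build_cot_prompt`, `build_rag_prompt`), the prompt wording, and the bag-of-words cosine retriever are illustrative assumptions; real RAG systems typically use dense embeddings or BM25 over a much larger corpus, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Toy RAG retriever (hypothetical): return the k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]


def build_cot_prompt(question: str) -> str:
    """CoT prompting: ask the model to write out its reasoning steps before answering."""
    return f"{question}\nLet's think step by step, numbering each step, then state the final answer."


def build_rag_prompt(question: str, passages: list[str]) -> str:
    """RAG prompting: prepend retrieved passages so the answer can be grounded in them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Context:\n{context}\n\nUsing the context above, answer: {question}"


# Usage with a tiny in-memory corpus (made-up example data).
corpus = [
    "Python lists are mutable sequences.",
    "The sorted builtin returns a new sorted list.",
    "Tuples are immutable sequences.",
]
question = "How do I get a new sorted list in Python?"
print(build_cot_prompt(question))
print(build_rag_prompt(question, retrieve(question, corpus, k=1)))
```

Here the second passage wins because it shares the most words with the question; CoT shapes *how* the model reasons, while RAG changes *what* it can draw on.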

The RAT approach integrates these techniques to create a more robust and informative reasoning process for LLMs:

- Initial CoT Generation: The LLM first generates a sequence of thought steps in response to a prompt, outlining the steps needed to complete the task.

- Iterative Revision with Retrieval: The thought steps are then revised one at a time. For each step, the model formulates a query from the task prompt, all previously generated and revised thought steps, and the current thought step. This query is used to retrieve relevant information from an external knowledge base.

- Refined Thoughts: The retrieved information is then used to revise the current thought step, improving its factual accuracy and reducing the risk of hallucination.

- Final Response: Depending on the task, the revised thought steps can be used directly as the final output (e.g., embodied task planning) or serve as a guide for the LLM to generate the final response in a step-by-step manner (e.g., code generation, creative writing).
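The four steps above can be sketched as a short Python loop. This follows the description in this article, not the authors' released code: `llm` and `retrieve` are hypothetical callables standing in for a real model and retriever, the `PLAN`/`QUERY`/`REVISE` prompt tags are invented for illustration, and `fake_llm` is a deterministic stub so the example runs without a model.

```python
from typing import Callable

# Hypothetical interfaces (assumptions, not from the paper or any library):
#   llm(prompt) -> str           : any text-generation call
#   retrieve(query) -> list[str] : any retriever over an external knowledge base
LLM = Callable[[str], str]
Retriever = Callable[[str], list[str]]


def rat(task: str, llm: LLM, retrieve: Retriever) -> list[str]:
    """Sketch of the RAT loop: draft a chain of thought, then revise it step by step."""
    # 1. Initial CoT generation: treat each non-empty line of the draft as one thought step.
    draft = [line.strip() for line in llm(f"PLAN\nTask: {task}").splitlines() if line.strip()]

    revised: list[str] = []
    for step in draft:
        context = f"Task: {task}\nPrevious steps: {' | '.join(revised)}\nCurrent step: {step}"
        # 2. Build a query from the task, the already-revised earlier steps, and the current step.
        query = llm(f"QUERY\n{context}")
        evidence = "\n".join(retrieve(query))
        # 3. Revise only the current step; earlier revised steps are left untouched.
        new_step = llm(f"REVISE\nEvidence:\n{evidence}\n{context}")
        revised.append(new_step.strip())

    # 4. The caller uses these steps directly (e.g., a plan) or as a guide for
    #    generating the final response step by step (e.g., code or prose).
    return revised


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a real model, for illustration only."""
    mode, _, rest = prompt.partition("\n")
    current = rest.rsplit("Current step: ", 1)[-1]
    if mode == "PLAN":
        return "Step 1: boil water\nStep 2: add pasta"
    if mode == "QUERY":
        return f"how to {current}"
    return f"{current} (checked against evidence)"


steps = rat("cook pasta", fake_llm, lambda q: [f"[doc for: {q}]"])
print(steps)
```

Because each iteration rewrites only the current step, a step that has already been revised is never rewritten again; a real implementation would swap `fake_llm` and the lambda retriever for an actual model and search index.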

This iterative approach allows the LLM to refine its reasoning process continuously, leveraging relevant external knowledge at each step. The core strengths of RAT lie in:

- Improved Factual Accuracy: By incorporating external knowledge, RAT helps mitigate hallucinations and grounds the generated responses in retrieved evidence.

- Context-Aware Reasoning: The iterative revision process allows the LLM to consider all previously generated steps and revise its reasoning accordingly, leading to more contextually relevant outputs.

- Targeted Correction: Rather than revising the entire CoT at once with RAG, RAT revises each step individually, reducing the risk of introducing errors into parts of the reasoning that were already correct.

The Effectiveness of RAT

The research team behind RAT has evaluated its effectiveness on various long-horizon generation tasks, including:

- Code generation

- Mathematical reasoning

- Embodied task planning (e.g., navigating a virtual environment)

- Creative writing

The results demonstrate that RAT significantly improves the performance of LLMs on these tasks compared to traditional CoT prompting and RAG approaches.

The Future of Long-Horizon Generation

RAT represents a significant step forward for LLMs in complex reasoning tasks. By enabling them to leverage external knowledge and refine their reasoning step-by-step, RAT paves the way for more robust, informative, and trustworthy AI applications. Future research directions are likely to focus on:

- Expanding Knowledge Sources: Exploring different knowledge sources beyond text corpora to further enhance the factual grounding of LLM reasoning.

- Reasoning Beyond Text: Investigating how to incorporate other modalities like images and videos into the retrieval process for a richer understanding of complex tasks.

- Explainable AI: Developing techniques for LLMs to explain their reasoning process along with the final output, fostering greater trust and transparency in AI-powered solutions.

Overall, RAT presents a promising approach for enhancing LLM reasoning in long-horizon generation tasks. By combining the strengths of CoT prompting and RAG, it lays the groundwork for more reliable and informative AI systems.

Here’s the link to the official paper: Link